
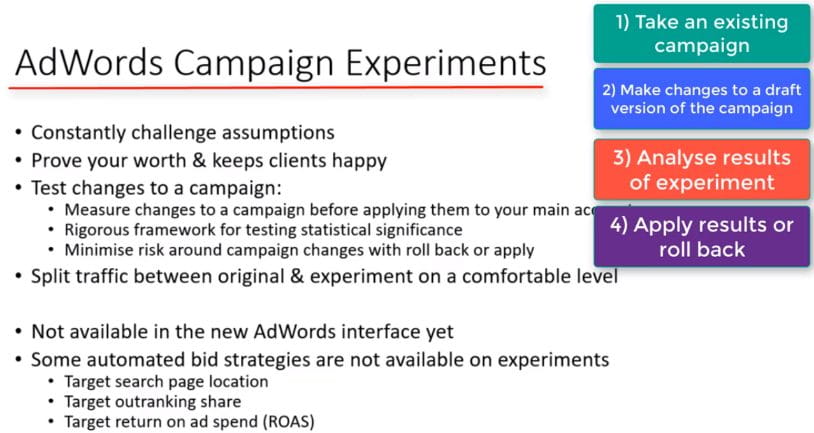

AdWords ‘Campaign Drafts and Experiments’ is one of my favorite advanced tools within AdWords. This tool was previously known as AdWords Campaign Experiments (ACE). I have reviewed many accounts over the years and, even in larger AdWords accounts, I rarely see advertisers using this feature. It is a real shame, because it is a fantastic feature that will help you improve your account.

In this section we will review what the tool does and understand the settings. I’ll show you how I use this tool and give you some ideas to test. Then we are going to look at an example: we’ll create our own draft campaign and learn how to roll it out as an experiment. Finally, we will analyse and interpret the results and take away actionable changes.

AdWords campaign experiments allow us to take an existing active campaign, implement changes to it and review the outcome of those changes. The tool lets us compare the experiment version of the campaign, with the changes applied, against the original unchanged version.

Essentially, we are splitting the campaign in 2 and A/B testing an experimental version with changes versus an original unchanged version.

Based on the reported outcomes we can decide whether the changes we made are effective in achieving our goal. If we achieve a statistically significant positive outcome, we can apply the experimental changes back into the campaign. Otherwise we can choose to roll back the experiment and revert to the original campaign as it was prior to the changes. This serves as a rigorous and effective method of implementing and testing optimisations to an account.

There are several important reasons every AdWords account manager should be utilising this tool. Firstly, I believe that every AdWords account should constantly be tested. There is no such thing as a set-and-forget strategy where you can say “the account is running perfectly, we don’t want to ruin anything, and we don’t need to be doing much”. Consider that the system behind AdWords is dynamic in several ways. It is an auction-based environment, which means that bids are constantly changing, and this affects budgets. Competitors are also dynamic: their ad copy changes and their strategies evolve all the time. These are just some of the reasons your own strategy should constantly be tested and evolving. Your campaigns can always be improved.

Also, consider that as an account manager, your clients are paying you to optimise their account. Testing and showing the results of tests (even if negative) will also keep your clients happy, because you are proving that you are trying new things and testing assumptions using a data-driven strategy.

Experiments allow you to test existing assumptions without completely altering your account. One of the biggest sources of pushback when advertisers consider making changes is that they often don’t want to change the status quo. If it ain’t broke, don’t fix it! Experiments allow us to challenge assumptions with minimal risk.

The experiment provides a preview of results within a given timeframe. This provides higher confidence to roll out changes back to the original campaign. The experiment results show whether the changes were positive in a statistically significant way. So, we can roll out changes only if they have had a significant effect on CTR or CVR.
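To make “statistically significant” concrete, here is a minimal sketch of one common way to compare two rates such as CTR or CVR, a two-proportion z-test. It is an illustration only and not necessarily the calculation AdWords itself performs; the function name and the click and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(successes_a, trials_a, successes_b, trials_b):
    """Two-sided z-test for a difference between two rates (e.g. CTR or CVR).

    Arm A is the original campaign, arm B is the experiment.
    Returns the z statistic and the two-sided p-value.
    """
    rate_a = successes_a / trials_a
    rate_b = successes_b / trials_b
    # Pooled rate under the assumption that both arms perform the same.
    pooled = (successes_a + successes_b) / (trials_a + trials_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / trials_a + 1 / trials_b))
    if std_err == 0:
        raise ValueError("Not enough variation in the data to run the test")
    z = (rate_b - rate_a) / std_err
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical counts: 50 conversions from 1,000 clicks (original)
# versus 80 conversions from 1,000 clicks (experiment).
z, p = two_proportion_z_test(50, 1000, 80, 1000)
print(f"z = {z:.2f}, p = {p:.4f}")
```

A p-value below 0.05 corresponds to the usual 95% threshold. With small click volumes this approximation becomes unreliable, which is another reason to let an experiment gather enough data.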

The best feature of AdWords Experiments is its ability to minimize risk and save time. If my campaign changes go horribly wrong and my conversion rate drops by 10%, I don’t need to go trawling through weeks of changes and working out how to reverse them. This would be incredibly time consuming. Instead, I can simply roll back the experiment.

Using this methodology also forces us to test one major change at a time for significance, rather than making several changes at once. This is a better approach.

The experiment framework allows us to set a traffic split. We can decide whether to split the traffic 50/50 between the experiment and the original, or in any other proportion. For example, to minimise risk and impact even further we could send only 20% of traffic to the experiment version.
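The trade-off with a smaller split is run time: the arm that receives less traffic takes longer to collect data. The sketch below estimates this under simple assumptions (steady daily click volume, a target number of clicks per arm that you choose yourself). The function name and all figures are hypothetical.

```python
import math


def days_to_reach(clicks_needed_per_arm, daily_clicks, experiment_percent):
    """Estimate days until the smaller arm has collected enough clicks.

    experiment_percent is the whole-number share of traffic sent to the
    experiment, e.g. 50 for a 50/50 split or 20 for a 20/80 split.
    """
    if not 0 < experiment_percent < 100:
        raise ValueError("experiment_percent must be between 1 and 99")
    # The arm with the smaller share is the bottleneck.
    smaller_percent = min(experiment_percent, 100 - experiment_percent)
    return math.ceil(clicks_needed_per_arm * 100 / (daily_clicks * smaller_percent))


# Hypothetical campaign with 200 clicks a day, aiming for 1,000 clicks per arm.
for split in (50, 20):
    print(f"{split}% to experiment: about {days_to_reach(1000, 200, split)} days")
```

With these example figures a 20% split takes two and a half times as long as a 50/50 split to gather the same amount of data in the experiment arm.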

The following are key examples of how you can use campaign drafts and experiments within your day to day AdWords practice. Many of these I regularly use within my own client accounts. The possibilities are endless.

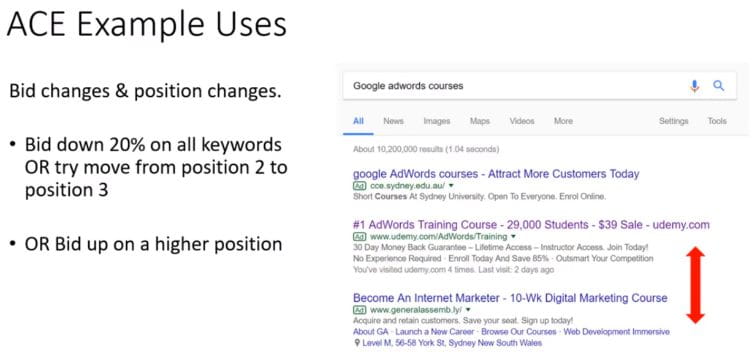

Testing bid and position changes is at the heart of what many AdWords specialists spend their time doing. We know that both the bid and the Quality Score (QS) of our keywords determine the Ad Rank. By changing the keyword bids, we can affect our ad position.

Campaign experiments can be very useful to test whether the bid changes we make are effective. One test you can try is to bid down 20% across the board on all keywords within the campaign. Bidding down this much could move your ad from position 2 to 3. Usually this would be a drastic move to make, but implementing it within an experiment that will run for a limited time is less risky.

Running this experiment is a great starting point for implementing a testing strategy into your account. The 20% bid change might do nothing, but that just means that after the testing period is up, you can implement a new experiment, this time bidding down another 20%. You could end up finding a position lower on the page with a better ROI, saving your advertiser a lot of money.

For advertisers looking to increase traffic, increasing bids would be the most obvious test to implement. Experiments are a fantastic way to test this incrementally without blowing out the CPA of the campaign and losing all previous performance.
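The across-the-board bid change described above is simple arithmetic, sketched here for planning purposes. The keywords and bids are made up, and `adjust_bids` is a hypothetical helper, not an AdWords function.

```python
def adjust_bids(bids, percent_change):
    """Return a copy of the bids with every bid changed by percent_change.

    Use a negative value to bid down, e.g. -20 for a 20% decrease.
    Bids are rounded to the nearest cent.
    """
    factor = 1 + percent_change / 100
    return {keyword: round(bid * factor, 2) for keyword, bid in bids.items()}


# Hypothetical keyword bids in dollars.
original_bids = {"running shoes": 2.00, "trail running shoes": 1.50}

first_test = adjust_bids(original_bids, -20)
second_test = adjust_bids(first_test, -20)

print(first_test)
print(second_test)
```

Note that the changes compound: bidding down 20% and then another 20% leaves bids at 64% of the original, a 36% reduction rather than 40%.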

Experiments can be an excellent tool for testing traffic to a new landing page. The next time you build a new landing page, instead of automatically sending AdWords traffic to the new page, why not test it against the old one with an experiment?

This is also far better than creating two ad groups, one with the old landing page and one with the new. There is no doubling up on keyword data, changes can be rolled back faster, and less budget is wasted.

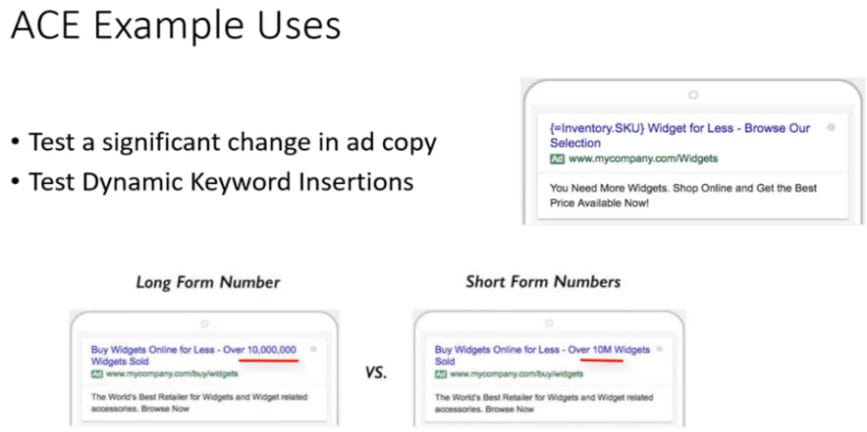

Changing ad copy can be quite risky. I had an instance with one of my clients, who wanted to put “best” in all their ads.

I suggested to them that we test this change via an experiment. This would give visibility into whether “best” is an effective differentiator for customers in ad copy. We added “best” to 30% of all the ad copy in the account and ran an experiment. Results showed that there was a significant positive difference. The client was happy and so was I!

We were looking to implement dynamic keyword insertion (DKI) across a client account. We believed that it would make ads more relevant, but we wanted to be sure before we made a major change across the account. We created a new draft with both the regular static ads and duplicate ads with DKI running. It turned out in our case that DKI ads did not move the needle.

I’d suggest testing all sorts of things. Some examples:

Etc…

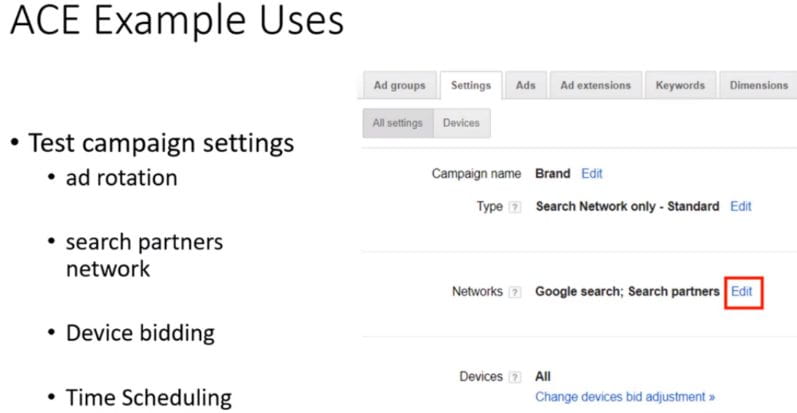

There are many different settings that you can adjust at the campaign level. Instead of just changing these settings and hoping for the best, test them.

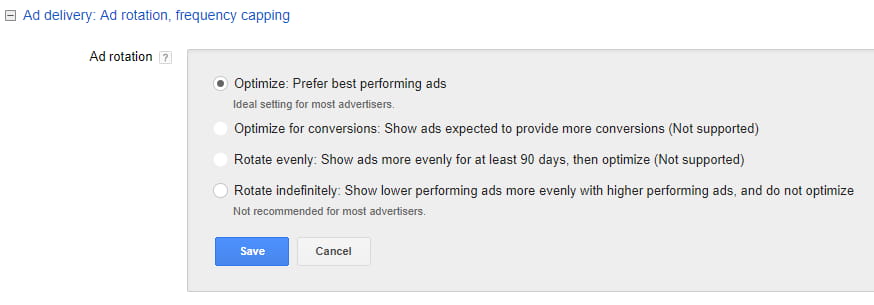

Ad rotation settings allow you to rotate evenly or let AdWords optimize for you. If you can’t decide, I suggest you test what works for:

Search partners: Test whether you should opt-in for the search partner network.

Device bidding: Test having different bids for mobiles and desktops.

Time scheduling: Test changes to bids at various times in the day. Does having a schedule make your account more effective? Test removing your current schedule, which could be out of date.

Removal of major keywords: It’s often the case that there are one or two major keywords in an industry. We often think these are crucial to have in the account and we are scared to remove them in case the volume drops dramatically. The reality could be that they are money drainers and it is better to spend your budget on longer-tail, higher-converting, lower-volume terms. It’s this kind of change that is the perfect candidate for an AdWords test. The risk is low.

At the time of writing, there are some limitations to what we can test. Unfortunately, some of the automated bid strategies are unavailable for testing with experiments at the moment. These include: ‘target search page location’, ‘target outranking share’ and ‘target ROAS’. It’s a real shame as many advertisers are hesitant to implement automated bidding strategies. If we could only test their value against manual bidding and see for ourselves that they are effective, I’m confident their uptake would be stronger.

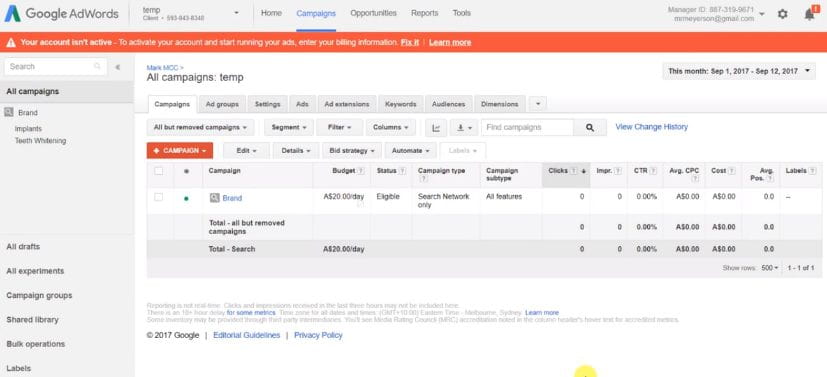

To set up an AdWords Campaign Experiment, we first select an existing campaign; this will be the base campaign for our experiment. Then, from within the ad group view of that campaign, we select ‘draft & experiments’ from the menu > create new.

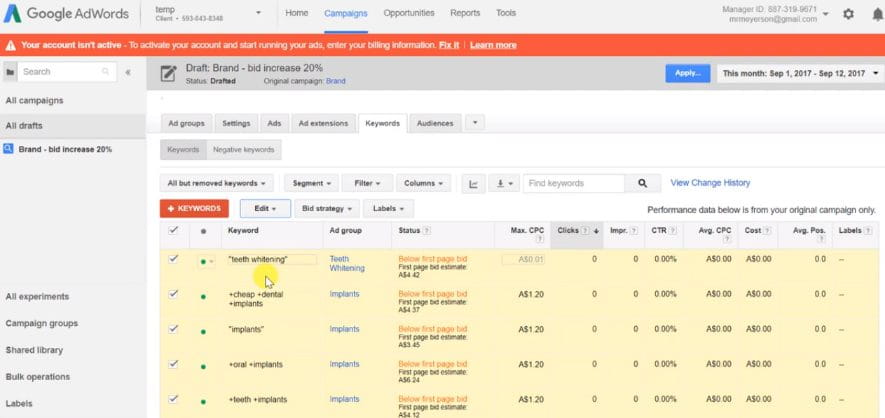

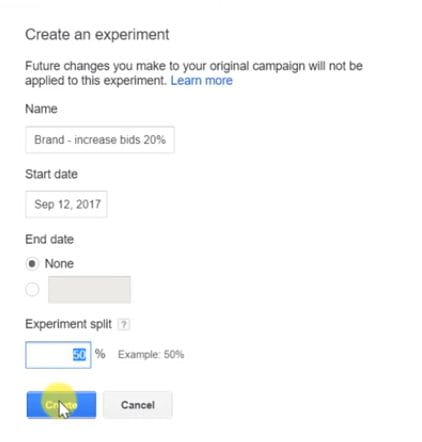

The system will now prompt you to give the draft a name. I recommend being as descriptive as possible: include the name of the original campaign, what you are testing and the date you want it to run until. For example: ‘Brand Campaign – bid increase 20% – run to April 15th’.

We now enter the draft mode, where we can make the changes to our campaign draft that we want to test. This could be any of the ideas discussed in the previous section. If, for example, you want to test bid increases, then select all your keywords > change max. CPC bids > increase bids by 20%. Afterwards, you should see that all your bids have increased.

Once we are happy with the changes we convert the draft into an experiment by clicking the apply button. When prompted to give the experiment a name, I usually use the same name as the draft.

Select the start date as tomorrow. For the end date I’d recommend setting it to ‘None’, because if you change it to a certain date, the experiment will stop at that date and you won’t be able to restart it.

For the traffic split, to have a fair test that doesn’t run too long I always recommend 50/50. However, if you want to be extra cautious about a change, you can always send less traffic to the experiment.

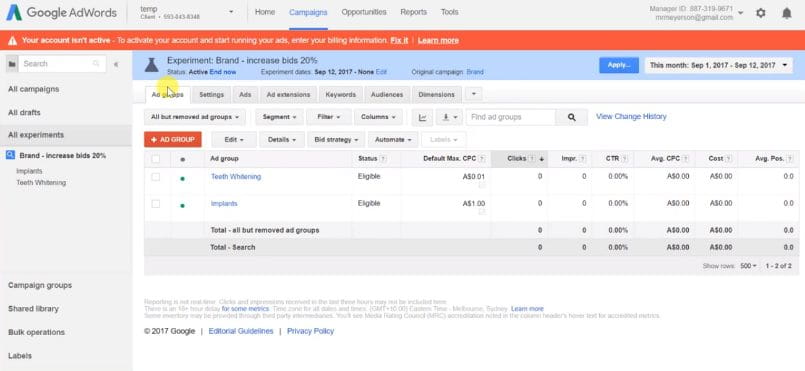

Once we click Apply the system begins creating the experiment. Wait a few seconds, hit refresh and then jump in. Under your experiments tab you will see that you now have an ‘active’ or ‘scheduled’ experiment. From this tab you can view all your experiments and click into them to view real time results.

If you navigate back to your campaigns tab and click on the original campaign from which you created the experiment, you can see a beaker icon indicating that an experiment is running on this campaign. You can jump straight to the experiment by clicking on it.

In the experiment view you can easily compare the original to the experiment. After the experiment has run for the allotted time, if you are happy with the results, click the apply button. You will be presented with two options.

Option one: Update the original campaign. This option allows you to apply the changes you made to your experiment back into the original campaign. From now on the experiment will end and the original will continue with the recent changes.

Option two: Convert the experiment to a new campaign. In this scenario, the experiment will turn into a new campaign which will run alongside the original campaign. They will be two separate campaigns, independent of each other.

We also have the option to end the experiment. If the results do not turn out advantageous, we can end the experiment without applying the changes. This means that the original campaign will continue as it was originally before the experiment began.

One of the easiest traps to fall into when running experiments is creating a false positive result because of a time design issue. This often occurs when the experimenter extends the run time of the experiment to achieve a significant or desired result, often without realising that doing so can create a false positive. If we extend the run time by a week and get a significant result, extending it by another week may give a non-significant result, so altering the period to suit our needs is not a fair test.

Even in well-designed university experiments this kind of thing often occurs. It is important to set a time frame before the experiment starts running and stick to this. This is the reason I include the end dates within the experiment title.
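The effect can be illustrated with a small simulation. The sketch below runs many imaginary experiments in which the original and the experiment have exactly the same true conversion rate, so any “significant” result is a false positive. It compares checking once at a fixed end date against checking every week and stopping at the first significant result. All names and parameters are hypothetical, and the test used is a simple two-proportion z-test at the 95% level.

```python
import math
import random


def is_significant(conv_a, clicks_a, conv_b, clicks_b, z_critical=1.96):
    """Two-sided two-proportion z-test at roughly the 95% level."""
    pooled = (conv_a + conv_b) / (clicks_a + clicks_b)
    if pooled in (0, 1):
        return False  # no variation, nothing to test
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / clicks_a + 1 / clicks_b))
    z = (conv_b / clicks_b - conv_a / clicks_a) / std_err
    return abs(z) > z_critical


def false_positive_rates(simulations=300, weeks=4, weekly_clicks=400,
                         true_rate=0.05, seed=1):
    """Return (rate when peeking weekly, rate when checking only at the end)."""
    rng = random.Random(seed)
    peeking_hits = 0
    fixed_hits = 0
    for _ in range(simulations):
        conv_a = conv_b = clicks = 0
        seen_significant = False
        significant_now = False
        for _week in range(weeks):
            clicks += weekly_clicks
            # Both arms share the same true conversion rate.
            conv_a += sum(rng.random() < true_rate for _ in range(weekly_clicks))
            conv_b += sum(rng.random() < true_rate for _ in range(weekly_clicks))
            significant_now = is_significant(conv_a, clicks, conv_b, clicks)
            seen_significant = seen_significant or significant_now
        peeking_hits += seen_significant  # stopped at any "winning" week
        fixed_hits += significant_now     # judged only on the planned end date
    return peeking_hits / simulations, fixed_hits / simulations


peeking, fixed = false_positive_rates()
print(f"False positives when peeking weekly: {peeking:.1%}")
print(f"False positives with a fixed end date: {fixed:.1%}")
```

Because the weekly check includes the final week, the peeking rate can never be lower than the fixed-date rate, and in practice it tends to be noticeably higher. That is the argument for fixing the end date up front.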

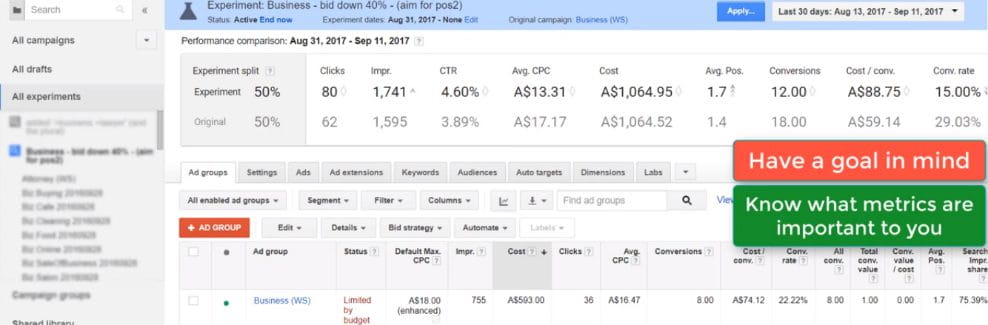

Let’s jump in and have a look at the experiment results section.

Aside from setting a pre-planned time frame for the experiment to run, it is also important to have a goal or KPI in mind for the experiment.

The KPI could be a % increase (or decrease) in CTR, conversions, CPA or conversion rate.

The % change is important to know at the outset, so you have a criterion that you will stick to.
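One way to hold yourself to the criterion is to write it down as a simple check before the experiment starts. The sketch below is a hypothetical helper, not part of AdWords: a positive target means the KPI should rise by at least that percentage (for example conversion rate), and a negative target means it should fall by at least that much (for example CPA).

```python
def percent_change(original, experiment):
    """Relative change of the experiment versus the original, in percent."""
    return (experiment - original) / original * 100


def meets_target(original, experiment, target_percent):
    """Check the observed change against a target fixed before the test."""
    change = percent_change(original, experiment)
    if target_percent >= 0:
        return change >= target_percent
    return change <= target_percent


# Hypothetical goal: reduce CPA by at least 10%.
print(meets_target(original=50.0, experiment=40.0, target_percent=-10))
print(meets_target(original=50.0, experiment=48.0, target_percent=-10))
```

This check only covers the size of the change. Whether the change is statistically significant is a separate question that the experiment report answers.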

In the Experiments tab in AdWords, you can view all your experiments that are running or have run in the past.

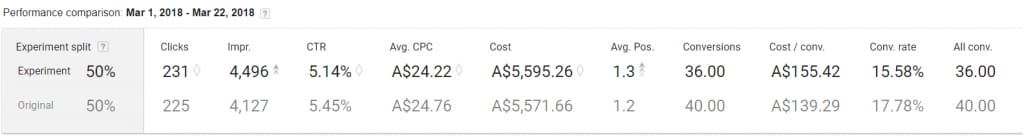

Once you select your experiment, the first thing to do is to set the date range. Then you can view changes between the original and the experiment version for the specified period.

In the example above, the experiment decreased the CPCs by 20% with the aim of testing whether lower bids could achieve a lower cost per conversion. We can see that the experiment had slightly lower bids, $24.22 vs $24.76. This led to a slightly lower position on the SERP, 1.3 vs 1.2. But in terms of results, the opposite of what we were hoping for occurred: CPA increased and conversion rate decreased. This shows us that decreasing the bids slightly hasn’t really improved performance.
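As a quick sanity check on the two dollar figures quoted above, the relative difference can be worked out directly. The helper name is hypothetical; the inputs are the $24.76 (original) and $24.22 (experiment) values from the example.

```python
def relative_difference(original, experiment):
    """Difference of the experiment versus the original, in percent."""
    return (experiment - original) / original * 100


change = relative_difference(original=24.76, experiment=24.22)
print(f"Experiment vs original: {change:.2f}%")
```

The difference works out to roughly 2%, far smaller than the 20% change that was applied, which fits the observation that the position barely moved and supports retesting with a more aggressive change.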

The next step for me would be to retest this. I would probably want to be more aggressive next time and try to move the position more, ideally down to position 2. If I again receive mediocre performance, I am building up more evidence that being in a higher position is better for this campaign in terms of CPA. My next step would then be to test something other than bid positions, considering that this bid position is working in our favour.

Note that even where the experiment results are poor, this still gives us a positive outcome. We have discovered that our bid position is optimal and that bid changes are not what will help us improve the account at this stage.

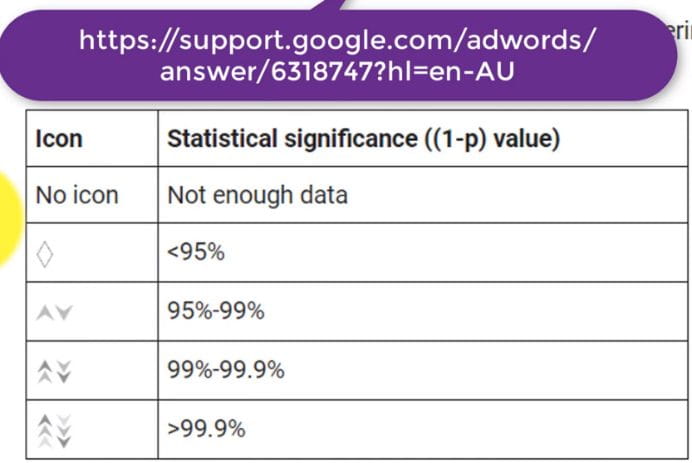

You might have noticed from the screenshot of the experiment results that there are some symbols in the results. These symbols are important and worth taking the time to understand.

The symbols tell us whether the results are statistically significant and to what degree. If there is no symbol, then there is not enough data yet. A diamond tells us that the results are not significant: the difference fell within the 95% range and was not large enough. The arrows tell us there is a significant result, where more arrows indicate lower p-values, which means a higher degree of significance. You can learn more about these symbols here: https://support.google.com/adwords/answer/6318747?hl=en-AU

Once your experiment is complete, you have 2 options:

Either way, it’s good practice to keep a record of the experiments you have run and what the results were, so that you can build on previous experiments and present results to clients. This helps keep an AdWords account healthy.
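A record like this does not need anything elaborate. As one possible approach, the sketch below keeps each experiment as a row that can be exported to a CSV file or spreadsheet. The field names and the example entry are hypothetical, so adapt them to whatever you report to clients.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class ExperimentRecord:
    campaign: str
    change_tested: str
    kpi: str
    outcome: str  # e.g. "applied to original" or "ended without applying"


def to_csv(records):
    """Render experiment records as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer, fieldnames=[f.name for f in fields(ExperimentRecord)]
    )
    writer.writeheader()
    for record in records:
        writer.writerow(asdict(record))
    return buffer.getvalue()


# Hypothetical log entry.
log = [
    ExperimentRecord(
        campaign="Brand Campaign",
        change_tested="bid decrease 20%",
        kpi="CPA",
        outcome="ended without applying",
    )
]
print(to_csv(log))
```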


Copyright © 2014 – 2025 One Egg. All rights reserved.
